Historic Weather Data API: A How-to for Accurate Analysis

Oct 07, 2024

Climate data has become one of the most important inputs for researchers, organizations, and anyone analyzing current and past conditions. Historical weather records help organizations anticipate likely outcomes and plan their actions accordingly. The Tomorrow.io API provides access to this information, including historical temperature, precipitation, and other meteorological parameters.

This article explains how to use Tomorrow.io's historical weather data API for accurate analysis. It covers the API's features, shows how to build a data pipeline in Python (with the pandas package) to collect weather data, and describes ways to analyze the results. By the end, readers should understand how to use historical weather information in their own data analysis and draw conclusions about trends in weather patterns.

Exploring Tomorrow.io's Historical Weather Data

Tomorrow.io's Historical Weather Data API provides comprehensive past weather data that is well suited to analysis. It gives clients historically accurate information about past weather so they can make informed decisions based on observed trends.

Types of Available Data

The Tomorrow.io weather API covers many parameters, including temperature, wind speed, humidity, cloud cover, and precipitation. Clients get access to more than 80 data layers that together give a clear picture of past climate. These layers are useful in many areas, for example in establishing the current state of the climate and in planning practical activities.

Temporal and Spatial Resolution

Another strong advantage is that Tomorrow.io's historical archive reaches back to the year 2000, and the API can retrieve historical timelines spanning up to 20 years. Time intervals range from minute-level data (for the most recent seven hours) to hourly and daily data.
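Because the archive starts in 2000 and a single analysis may cover many years, it can help to split a long date range into smaller requests. The sketch below assumes a hypothetical maximum of 30 days per request (`MAX_DAYS_PER_REQUEST`); the real per-request limits depend on your plan and should be checked in Tomorrow.io's documentation. The helper name `split_range` is likewise hypothetical.

```python
from datetime import datetime, timedelta, timezone

# HYPOTHETICAL per-request window; check the limits documented for your plan.
MAX_DAYS_PER_REQUEST = 30
# Start of the historical archive, as stated in this article.
EARLIEST_DATE = datetime(2000, 1, 1, tzinfo=timezone.utc)


def split_range(start, end, max_days=MAX_DAYS_PER_REQUEST):
    """Split [start, end) into consecutive windows of at most max_days each."""
    if start < EARLIEST_DATE:
        raise ValueError("start precedes the historical archive")
    if end <= start:
        raise ValueError("end must be after start")
    step = timedelta(days=max_days)
    windows = []
    cursor = start
    while cursor < end:
        nxt = min(cursor + step, end)  # the last window may be shorter
        windows.append((cursor, nxt))
        cursor = nxt
    return windows


if __name__ == "__main__":
    for s, e in split_range(datetime(2020, 1, 1, tzinfo=timezone.utc),
                            datetime(2020, 3, 1, tzinfo=timezone.utc)):
        print(s.isoformat(), "->", e.isoformat())
```

Each window can then be sent as its own request, which also makes retries after a failure cheaper.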

Spatially, the API supports point locations, polygons with an area of up to 10,000 km², and polylines up to 2,000 km long. This flexibility lets users analyze weather at specific sites as well as across larger areas such as a city or region.
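The sketch below builds GeoJSON location objects (coordinates in longitude, latitude order) and checks a polyline against the 2,000 km limit quoted above using the haversine great-circle distance. Whether the API accepts these exact GeoJSON objects is an assumption to confirm against the documentation, and the helper names are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0088  # mean Earth radius
MAX_POLYLINE_KM = 2000       # polyline limit quoted in this article


def haversine_km(lon1, lat1, lon2, lat2):
    """Great-circle distance in km between two (lon, lat) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def point_location(lon, lat):
    """GeoJSON Point for a single site."""
    return {"type": "Point", "coordinates": [lon, lat]}


def polyline_location(coords):
    """GeoJSON LineString; rejects routes longer than MAX_POLYLINE_KM."""
    if len(coords) < 2:
        raise ValueError("a polyline needs at least two points")
    length = sum(haversine_km(*coords[i], *coords[i + 1]) for i in range(len(coords) - 1))
    if length > MAX_POLYLINE_KM:
        raise ValueError(f"polyline is {length:.0f} km, above {MAX_POLYLINE_KM} km")
    return {"type": "LineString", "coordinates": [list(c) for c in coords]}
```

Checking the length on the client side avoids spending a request on a route the API would reject.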

Data Quality and Accuracy

Tomorrow.io states that it focuses on delivering accurate meteorological data, including historical weather data. According to the company, its data is validated and relied on by data scientists, meteorologists, and leading enterprises. This validation gives users reasonable confidence in the figures for analysis and decision making.
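Vendor validation does not remove the need to check the data your own pipeline receives. A minimal sketch of a client-side quality check, assuming rows are dicts with a `time` datetime and metric values; the `BOUNDS` plausibility ranges and the function name are illustrative, not official limits.

```python
from datetime import datetime, timedelta

# ILLUSTRATIVE plausibility ranges (metric units assumed); tune for your region.
BOUNDS = {
    "temperature": (-90.0, 60.0),  # degrees Celsius
    "humidity": (0.0, 100.0),      # percent
    "windSpeed": (0.0, 120.0),     # m/s
}


def quality_report(rows, step=timedelta(hours=1)):
    """Return (out_of_range, gaps) for rows sorted by their 'time' datetime."""
    out_of_range = []
    for row in rows:
        for field, (lo, hi) in BOUNDS.items():
            value = row.get(field)
            # Missing fields are skipped here rather than flagged.
            if value is not None and not (lo <= value <= hi):
                out_of_range.append((row["time"], field, value))
    gaps = []
    for prev, cur in zip(rows, rows[1:]):
        # A gap is any spacing larger than the expected timestep.
        if cur["time"] - prev["time"] > step:
            gaps.append((prev["time"], cur["time"]))
    return out_of_range, gaps
```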

The historical archive is produced by reanalysis, which combines short-range weather forecasts with observations using four-dimensional variational assimilation (4D-Var). With historical data of this kind, users can analyze trends, verify past events, and improve the accuracy of their forecasts.
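For reference, 4D-Var in its standard textbook form chooses the initial state $\mathbf{x}_0$ that minimizes the cost function below. This is the general method, not a description of Tomorrow.io's specific configuration, which is not detailed here. $\mathbf{x}_b$ is the background (prior forecast) state, $\mathbf{B}$ and $\mathbf{R}_i$ are the background- and observation-error covariance matrices, $\mathbf{y}_i$ are the observations at time $t_i$, $M_{0\to i}$ is the forecast model that carries the state from $t_0$ to $t_i$, and $H_i$ maps the model state into observation space.

$$
J(\mathbf{x}_0) = \tfrac{1}{2}\,(\mathbf{x}_0 - \mathbf{x}_b)^\top \mathbf{B}^{-1} (\mathbf{x}_0 - \mathbf{x}_b)
+ \tfrac{1}{2} \sum_{i=0}^{N} \big(\mathbf{y}_i - H_i(M_{0\to i}(\mathbf{x}_0))\big)^\top \mathbf{R}_i^{-1} \big(\mathbf{y}_i - H_i(M_{0\to i}(\mathbf{x}_0))\big)
$$

The first term keeps the analysis close to the prior forecast; the second keeps the model trajectory close to every observation in the time window, which is what makes the method four-dimensional.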

Building a Weather Data Pipeline

To build a robust weather data pipeline, developers need to focus on three aspects: designing how data is collected and stored, writing scripts that pull the data, and scheduling regular updates. Together these keep both historical weather data and forecasts consistently available for analysis.

Designing the Data Flow

The first step in building a weather data pipeline is to decide how data should flow from its source to its end users. For weather data, this means identifying the sources, such as the Tomorrow.io API, and choosing the storage, such as a database or a cloud storage service.

When designing the data flow, consider the volume of data and how often it is updated, as well as which weather parameters to collect: temperature, rainfall, location (latitude and longitude), and any other climatological variables the analysis needs. This ensures the pipeline can handle the amount of data it must process and store.
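One way to make these decisions concrete is a storage schema. The sketch below uses SQLite from Python's standard library as a stand-in for whatever database you choose; the table name, columns, and units are assumptions. The composite primary key on location and timestamp means that re-running a retrieval for the same window overwrites rows instead of duplicating them.

```python
import sqlite3

# ASSUMED schema: one row per location and timestamp.
SCHEMA = """
CREATE TABLE IF NOT EXISTS observations (
    latitude      REAL NOT NULL,
    longitude     REAL NOT NULL,
    time_utc      TEXT NOT NULL,  -- ISO 8601 timestamp
    temperature   REAL,           -- degrees Celsius (metric units assumed)
    precipitation REAL,           -- mm/hr (assumed)
    humidity      REAL,           -- percent
    PRIMARY KEY (latitude, longitude, time_utc)
)
"""


def open_store(path=":memory:"):
    """Open (or create) the database and ensure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def upsert(conn, rows):
    """Insert rows; an existing row with the same key is replaced, not duplicated."""
    conn.executemany(
        "INSERT OR REPLACE INTO observations "
        "(latitude, longitude, time_utc, temperature, precipitation, humidity) "
        "VALUES (?, ?, ?, ?, ?, ?)",
        rows,
    )
    conn.commit()
```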

Implementing Data Retrieval Scripts

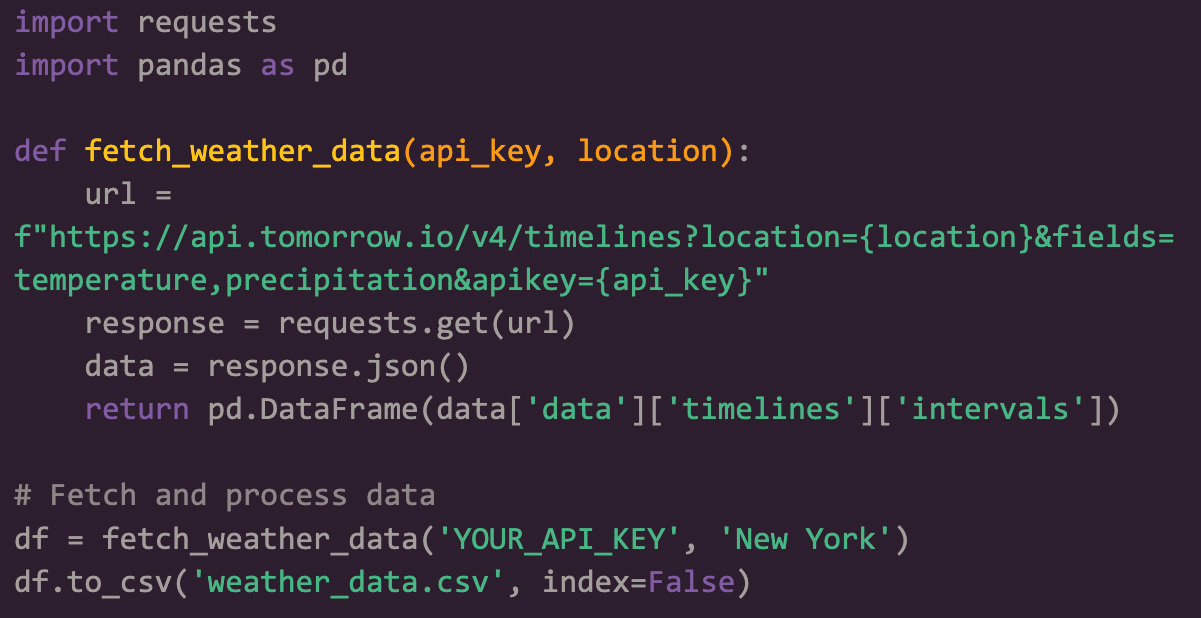

With the data flow defined, the next task is to write data retrieval scripts. Python is widely used for this because of its rich ecosystem of libraries and its ease of use, and libraries such as pandas make data manipulation and analysis faster and easier.

A typical data retrieval script might involve the following steps:

- Making API requests to fetch weather data
- Parsing the JSON response
- Transforming the data into a suitable format (e.g., CSV)
- Storing the processed data in the chosen storage solution

Here's a simplified example of how a data retrieval script might look:
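This sketch follows the four steps using only Python's standard library. It assumes Tomorrow.io's v4 historical endpoint at `https://api.tomorrow.io/v4/historical`, a POST body with `location`, `fields`, `timesteps`, `startTime`, `endTime`, and `units`, and a response shaped as `data.timelines[].intervals[]`; confirm all of these against the current API reference before relying on them. The field names and the `TOMORROW_API_KEY` environment variable are also assumptions.

```python
import csv
import json
import os
import urllib.request

# ASSUMED endpoint and request shape; verify against Tomorrow.io's current v4 API reference.
API_URL = "https://api.tomorrow.io/v4/historical"
# ASSUMED field names for the layers this pipeline collects.
FIELDS = ["temperature", "humidity", "windSpeed", "precipitationIntensity"]


def build_request(location, start, end, timestep="1h", api_key=""):
    """Step 1a: build a POST request for one location and time window."""
    body = {
        "location": location,    # "lat,lon" string, e.g. "42.3478,-71.0466"
        "fields": FIELDS,
        "timesteps": [timestep],
        "startTime": start,      # ISO 8601, e.g. "2023-01-01T00:00:00Z"
        "endTime": end,
        "units": "metric",
    }
    return urllib.request.Request(
        f"{API_URL}?apikey={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


def fetch(request):
    """Step 1b: send the request; step 2: decode the JSON response."""
    with urllib.request.urlopen(request, timeout=30) as resp:
        return json.load(resp)


def to_rows(payload):
    """Step 3: flatten the assumed data.timelines[].intervals[] structure into dicts."""
    rows = []
    for timeline in payload["data"]["timelines"]:
        for interval in timeline["intervals"]:
            row = {"time": interval["startTime"]}
            # Missing fields become None so every row has the same columns.
            row.update({f: interval["values"].get(f) for f in FIELDS})
            rows.append(row)
    return rows


def save_csv(rows, path):
    """Step 4: store the flattened rows as a CSV file."""
    with open(path, "w", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=["time", *FIELDS])
        writer.writeheader()
        writer.writerows(rows)


if __name__ == "__main__":
    key = os.environ.get("TOMORROW_API_KEY")  # ASSUMED variable name
    if not key:
        print("Set TOMORROW_API_KEY to run the live request.")
    else:
        req = build_request("42.3478,-71.0466", "2023-01-01T00:00:00Z",
                            "2023-01-02T00:00:00Z", api_key=key)
        save_csv(to_rows(fetch(req)), "weather.csv")
```

Once the rows are parsed, `pandas.DataFrame(rows)` turns them into a DataFrame for the kinds of analysis described later in this article.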

Scheduling Regular Data Updates

To keep weather data continuously available, the pipeline should be updated on a regular schedule. This ensures that both current conditions and previously recorded ones are always ready for evaluation.

Tools such as Apache Airflow or cron jobs can run data retrieval scripts at set times. For example, a script could run daily to collect new weather records, or hourly to keep the data up to date.
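Whichever scheduler is used, the job itself still has to work out which interval to fetch next. The sketch below is a hypothetical helper (not part of any Tomorrow.io SDK) that computes the next complete window from the end of the last stored window, with an optional look-back to re-fetch recent hours. A crontab entry such as `0 * * * * python3 update_weather.py` (the script name is hypothetical) would run such a job at the start of every hour.

```python
from datetime import datetime, timedelta, timezone


def next_window(last_fetched, now, step=timedelta(hours=1), lookback=timedelta(0)):
    """Return the (start, end) window to request next, or None if nothing new.

    last_fetched: end of the previously stored window, or None on the first run.
    lookback: optional overlap for re-fetching recent intervals.
    """
    # Align 'now' down to a whole step so each run requests only complete intervals.
    epoch = datetime(1970, 1, 1, tzinfo=timezone.utc)
    end = epoch + ((now - epoch) // step) * step
    if last_fetched is not None:
        start = last_fetched - lookback
    else:
        start = end - step  # first run: fetch just the latest complete interval
    if start >= end:
        return None
    return start, end
```

Because the window is derived from stored state rather than from the clock alone, a missed run is caught up automatically on the next one.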

Together, these three components of pipeline design (data flow design, data retrieval scripts, and scheduled updates) give developers reliable and efficient access to weather data. The pipeline provides a stable foundation for applications ranging from weather widgets to complex data analysis projects.

Leveraging Weather Data for Insights

Historical weather data is useful across many sectors. Deeper analysis lets researchers detect relationships, trends, and patterns that inform strategic business planning and improve predictive accuracy.

Correlation Analysis

Correlation analysis is useful when two or more weather variables need to be compared with each other or with an outcome they might help explain. For example, researchers have studied possible relationships between climatic conditions and the transmission of diseases such as COVID-19, with temperature and humidity among the variables examined as potential influences on the number of cases and deaths. Studying such correlations helps researchers understand how weather conditions may affect public health and how to respond.
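A Pearson correlation between two aligned series is the simplest form of this analysis. The sketch below also includes a lagged variant, because an outcome such as case counts may respond to weather with a delay; the function names are hypothetical. A strong correlation indicates association only, not that the weather variable causes the outcome.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / math.sqrt(sxx * syy)


def lagged_pearson(x, y, lag):
    """Correlate x[t] with y[t + lag], e.g. weather on day t with an outcome lag days later."""
    if lag < 0 or lag >= len(x):
        raise ValueError("lag must be in [0, len(x))")
    return pearson(x[: len(x) - lag], y[lag:])
```

Scanning several lags and comparing the coefficients is a common way to look for a delayed response.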

Trend Identification

Analyzing historical data for patterns makes it possible to estimate the number and frequency of extreme weather events. Scientists use various statistical techniques to identify trends in temperature, rainfall, and other variables; the Mann-Kendall test and linear regression are commonly used to assess whether a trend is significant. Data quality, sampling errors, and the non-stationarity of the climate system must also be taken into account.
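Both methods named above can be sketched in a few lines. This version of the Mann-Kendall test computes $S = \sum_{i<j} \operatorname{sign}(x_j - x_i)$ with variance $n(n-1)(2n+5)/18$ and a continuity-corrected normal approximation, but omits the corrections for tied values and autocorrelation, so treat it as illustrative. The regression slope is an ordinary least-squares fit against the time index, in units of the series per time step. The function names are hypothetical.

```python
import math


def mann_kendall(series):
    """Mann-Kendall trend test without tie correction. Returns (S, Z, two-sided p)."""
    n = len(series)
    if n < 3:
        raise ValueError("need at least 3 values")
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = series[j] - series[i]
            s += (diff > 0) - (diff < 0)  # sign of each later-minus-earlier pair
    var_s = n * (n - 1) * (2 * n + 5) / 18
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return s, z, p


def ols_slope(y):
    """Least-squares slope of y against its index 0..n-1."""
    n = len(y)
    if n < 2:
        raise ValueError("need at least 2 values")
    xm = (n - 1) / 2
    ym = sum(y) / n
    num = sum((i - xm) * (v - ym) for i, v in enumerate(y))
    den = sum((i - xm) ** 2 for i in range(n))
    return num / den
```

A positive S with a small p-value suggests an increasing trend; the slope then gives its approximate size.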

Predictive Modeling with Weather Data

Machine learning (ML), including techniques such as gradient boosting, has been applied to improve the accuracy and efficiency of weather forecasting. ML models can analyze large volumes of data from many sources, such as weather stations, satellite imagery, and radar, to make better predictions. For instance, random forests, a widely used family of ML algorithms, produce predictions by combining many decision trees, each built from a different random subset of the data and of the attributes. These techniques help meteorologists prepare and disseminate more accurate forecasts for the industries and operations that depend on them.
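Before a model such as a random forest can be trained, a weather series usually has to be reshaped into supervised-learning form. The sketch below (hypothetical helper names) builds lagged features, where each row holds the previous `n_lags` values and the target is the next value, and splits the data chronologically rather than randomly so that evaluation mimics forecasting values the model has not yet seen.

```python
def make_lag_features(series, n_lags):
    """Turn a univariate series into (X, y): each X row is the n_lags values before y."""
    if n_lags < 1 or len(series) <= n_lags:
        raise ValueError("series must be longer than n_lags >= 1")
    X = [list(series[t - n_lags:t]) for t in range(n_lags, len(series))]
    y = list(series[n_lags:])
    return X, y


def chronological_split(X, y, test_fraction=0.2):
    """Split without shuffling: earlier rows train the model, later rows test it."""
    cut = int(len(X) * (1 - test_fraction))
    return X[:cut], X[cut:], y[:cut], y[cut:]
```

The resulting `X_train` and `y_train` could then be passed to, for example, scikit-learn's `RandomForestRegressor` through its `fit` method, with `predict` applied to `X_test`.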

Conclusion

The Tomorrow.io API is a useful source of historical weather data for many industries and individuals. With up to 20 years of data, it supports the analysis of long-term trends in weather patterns. Its flexible temporal and spatial resolution and high-quality data make it useful for researchers, businesses, and organizations that want to base decisions on past climate.

By integrating weather data into a solid pipeline and applying appropriate analysis methods, users can take full advantage of Tomorrow.io's historical data, from simple correlation analysis to trend identification and predictive modeling. These insights can inform policy in fields as diverse as public health, agriculture, and urban planning. As the climate changes, understanding how the weather has changed in the past will be critical for navigating increasingly complex conditions.